Self-Hosting Cognee: Choosing LLM on Ollama

Testing Cognee with local LLMs - real results

Cognee is a Python framework for building knowledge graphs from documents using LLMs. But does it work with self-hosted models?

Thoughts on LLMs for self-hosted Cognee

Choosing the best LLM for Cognee means balancing graph-building quality, hallucination rates, and hardware constraints. Cognee works best with larger, low-hallucination models (32B+) via Ollama, but mid-size options work for lighter setups.

Build AI search agents with Python and Ollama

Ollama’s Python library now includes native web search capabilities. With just a few lines of code, you can augment your local LLMs with real-time information from the web, reducing hallucinations and improving accuracy.

Pick the right vector DB for your RAG stack

Choosing the right vector store can make or break your RAG application’s performance, cost, and scalability. This comprehensive comparison covers the most popular options in 2024-2025.

Build AI search agents with Go and Ollama

Ollama’s Web Search API lets you augment local LLMs with real-time web information. This guide shows you how to implement web search capabilities in Go, from simple API calls to full-featured search agents.

Master local LLM deployment with 12+ tools compared

Local deployment of LLMs has become increasingly popular as developers and organizations seek enhanced privacy, reduced latency, and greater control over their AI infrastructure.

Deploy enterprise AI on budget hardware with open models

The democratization of AI is here. With open-source LLMs like Llama 3, Mixtral, and Qwen now rivaling proprietary models, teams can build powerful AI infrastructure using consumer hardware - slashing costs while maintaining complete control over data privacy and deployment.

LongRAG, Self-RAG, GraphRAG - Next-gen techniques

Retrieval-Augmented Generation (RAG) has evolved far beyond simple vector similarity search. LongRAG, Self-RAG, and GraphRAG represent the cutting edge of these capabilities.

Cut LLM costs by 80% with smart token optimization

Token optimization is the critical skill separating cost-effective LLM applications from budget-draining experiments.

Python for converting HTML to clean, LLM-ready Markdown

Converting HTML to Markdown is a fundamental task in modern development workflows, particularly when preparing web content for Large Language Models (LLMs), documentation systems, or static site generators like Hugo.

Integrate Ollama with Go: SDK guide, examples, and production best practices

This guide provides a comprehensive overview of available Go SDKs for Ollama and compares their feature sets.

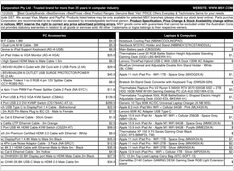

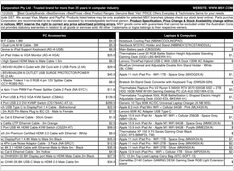

Comparing speed, parameters, and performance of these two models

Here is a comparison between Qwen3:30b and GPT-OSS:20b, focusing on instruction following, performance parameters, specs, and speed.

+ Specific Examples Using Thinking LLMs

In this post, we’ll explore two ways to connect your Python application to Ollama: 1. Via HTTP REST API; 2. Via the official Ollama Python library.

Slightly different APIs require a special approach.

Here’s a side-by-side support comparison of structured output (getting reliable JSON back) across popular LLM providers, plus minimal Python examples.

A couple of ways to get structured output from Ollama

Large Language Models (LLMs) are powerful, but in production we rarely want free-form paragraphs. Instead, we want predictable data: attributes, facts, or structured objects you can feed into an app. That’s LLM Structured Output.

Implementing RAG? Here are some Go code bits - 2...

Since standard Ollama doesn’t have a direct rerank API, you’ll need to implement reranking using Qwen3 Reranker in Go by generating embeddings for query-document pairs and scoring them.
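
The scoring step can be sketched roughly as follows. This is a minimal illustration in Python rather than Go, and it makes several assumptions: the embeddings have already been obtained elsewhere (for example from an Ollama embedding model), cosine similarity stands in for whatever scoring the linked post actually uses, and the helper names `cosine_similarity` and `rerank` are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rerank(query_vec, doc_vecs, top_k=None):
    """Return (document index, score) pairs sorted from most to least similar.

    query_vec and doc_vecs are assumed to be precomputed embeddings;
    fetching them from an embedding model is not shown here.
    """
    scored = [(i, cosine_similarity(query_vec, vec)) for i, vec in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored if top_k is None else scored[:top_k]


if __name__ == "__main__":
    # Toy vectors standing in for real embeddings.
    query = [1.0, 0.0]
    docs = [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
    for index, score in rerank(query, docs):
        print(index, round(score, 3))
```

In practice the toy vectors would be replaced with embeddings returned by the model, and `top_k` would limit how many documents are passed on to the generation step.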