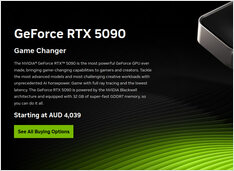

GPU and RAM Prices Surge in Australia: RTX 5090 Up 15%, RAM Up 38% - January 2026

January 2026 GPU and RAM price check

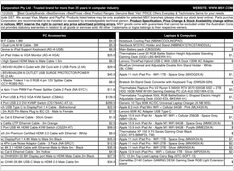

Today I'm looking at prices for top-end consumer GPUs and RAM modules: specifically, the RTX 5080 and RTX 5090, and a 32GB (2x16GB) DDR5-6000 kit.